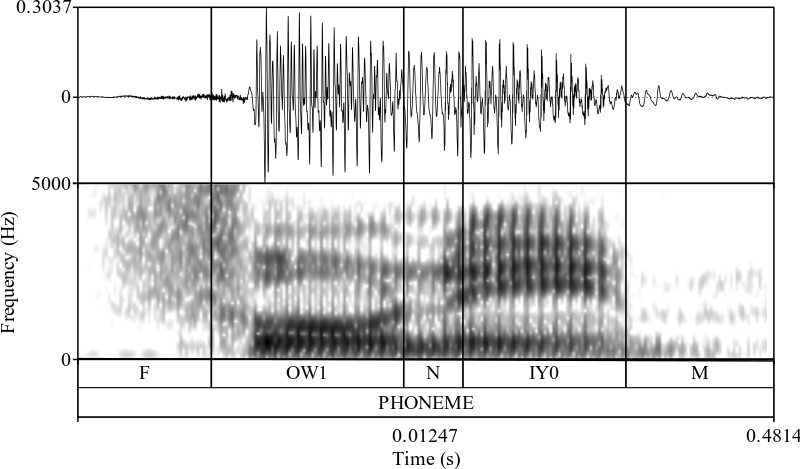

Linguists conducting phonetic research often need to take measurements on the acoustic segments that make up spoken utterances. Segmenting an audio file by hand is difficult and time-intensive, however, so many researchers turn to computer programs to do it for them. These programs are called forced aligners, and they perform a process called forced alignment, whereby they temporally align phonemes (the term used for these acoustic segments in the speech recognition literature) to their locations in an audio file. This process is intended to yield an alignment as close as possible to what an expert human aligner would produce, so that minimal editing of the boundary locations is needed before analyzing the segments.

Forced aligners traditionally have the user pass in the audio files they want aligned, accompanied by an orthographic transcription of the content of those files and a pronunciation dictionary that converts the words in the transcription into phonemes. The program then steps through the audio, converts it to Mel-frequency cepstral coefficients, and processes those with a hidden Markov model-based back end to determine the temporal boundaries of each phoneme in the audio file.
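The alignment step described above can be illustrated with a minimal sketch. It is not the implementation of any particular aligner: it assumes one state per phoneme, no transition probabilities, and per-frame log-likelihoods that have already been produced by some acoustic model. The names `words_to_phonemes`, `forced_align`, `lexicon`, and `frame_scores` are hypothetical and chosen only for this illustration.

```python
import math


def words_to_phonemes(transcript, lexicon):
    """Expand an orthographic transcription into a flat phoneme sequence."""
    phonemes = []
    for word in transcript.lower().split():
        phonemes.extend(lexicon[word])
    return phonemes


def forced_align(frame_scores, phonemes):
    """Viterbi search for the best monotonic frame-to-phoneme alignment.

    frame_scores[t][p] is the (assumed) log-likelihood of phoneme p at frame t.
    Returns (phoneme, start_frame, end_frame_exclusive) tuples.
    """
    n_frames, n_states = len(frame_scores), len(phonemes)
    if n_frames < n_states:
        raise ValueError("need at least one frame per phoneme")

    neg_inf = -math.inf
    best = [[neg_inf] * n_states for _ in range(n_frames)]
    advanced = [[False] * n_states for _ in range(n_frames)]
    best[0][0] = frame_scores[0][phonemes[0]]

    for t in range(1, n_frames):
        for s in range(n_states):
            stay = best[t - 1][s]
            advance = best[t - 1][s - 1] if s > 0 else neg_inf
            if advance > stay:
                best[t][s] = advance + frame_scores[t][phonemes[s]]
                advanced[t][s] = True  # phoneme s begins at frame t
            elif stay > neg_inf:
                best[t][s] = stay + frame_scores[t][phonemes[s]]

    # Trace back from the last phoneme at the last frame.
    starts = [0] * n_states
    s = n_states - 1
    for t in range(n_frames - 1, 0, -1):
        if advanced[t][s]:
            starts[s] = t
            s -= 1
    ends = starts[1:] + [n_frames]
    return list(zip(phonemes, starts, ends))


# Toy example: five frames, the first two favor "HH", the rest favor "AY".
lexicon = {"hi": ["HH", "AY"]}
hh_like = {"HH": math.log(0.9), "AY": math.log(0.1)}
ay_like = {"HH": math.log(0.1), "AY": math.log(0.9)}
frame_scores = [hh_like, hh_like, ay_like, ay_like, ay_like]

alignment = forced_align(frame_scores, words_to_phonemes("hi", lexicon))
```

With a fixed frame shift (10 ms is a common choice, assumed here rather than taken from the text), multiplying the frame indices by the shift converts each boundary to a time in the audio file.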

Recently, however, deep neural networks have been found to outperform traditional hidden Markov model implementations in speech recognition tasks. Nevertheless, few forced-alignment programs are available that use deep neural networks as their back end. Those that do still rely on analyzing hand-crafted Mel-frequency cepstral coefficients instead of the speech waveform itself, even though convolutional neural networks can learn the features needed to discriminate between classes in a classification task.

Our lab is working to develop a new forced-alignment program that uses deep neural networks as the back end and takes in raw audio instead of Mel-frequency cepstral coefficients. By having the network learn features from the audio itself rather than use features fixed before the network is ever run, the features it uses will be those that prove useful for the classification task. Additionally, the methodology of training the network will be more generalizable to other tasks because there will be no need to develop hand-crafted features as the input to the network.
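To make the idea of learning features from raw audio more concrete, the sketch below applies a single strided one-dimensional convolution with a ReLU directly to waveform samples, which is the kind of operation a first convolutional layer would perform in place of a fixed feature extractor. It is an illustration only and not the architecture under development: the function name `conv1d_relu` is hypothetical, and the kernel weights are fixed by hand here, whereas in a trained network they would be learned.

```python
def conv1d_relu(waveform, kernel, stride):
    """Slide one filter over raw samples and apply a ReLU to each response."""
    width = len(kernel)
    activations = []
    for start in range(0, len(waveform) - width + 1, stride):
        window = waveform[start:start + width]
        response = sum(x * w for x, w in zip(window, kernel))
        activations.append(max(0.0, response))
    return activations


# Toy waveform and a hand-set "rising edge" filter (weights would normally be learned).
waveform = [0.0, 1.0, 0.0, -1.0, 0.0, 1.0, 0.0, -1.0]
kernel = [-1.0, 1.0]
features = conv1d_relu(waveform, kernel, stride=2)
```

A real network would stack many such filters and layers, with the weights adjusted during training so that the resulting features serve the phoneme classification task.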